
Introducing Qdrant Cloud Inference ⚡️

Plus: Founder & CEO André Zayarni on how retrieval has become the execution path for agents...

CV Deep Dive

Today, we’re talking with André Zayarni, Founder and CEO of Qdrant.

Qdrant is a vector-native search engine built specifically for powering memory systems in AI agents. Unlike general-purpose databases with vector add-ons, Qdrant was designed from the ground up to support fast, filtered, real-time retrieval — giving developers full control over how agents store, access, and reason over context across steps, formats, and modalities. In practice, that means enabling agents to stay grounded, coherent, and responsive — even across long-horizon tasks or dynamically evolving memory.

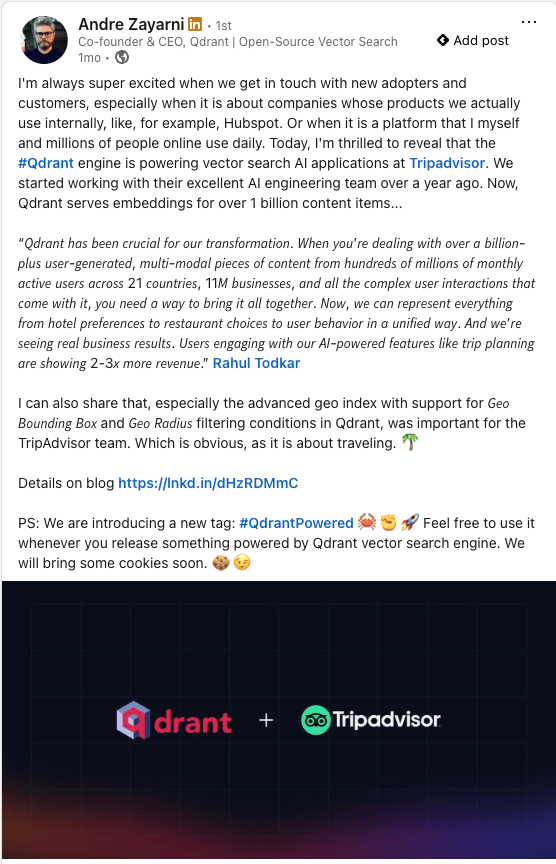

Qdrant is already powering production deployments at companies like Tripadvisor, HubSpot, Deutsche Telekom, Dust, and Lyzr, supporting everything from agentic travel planning and customer support to complex multi-agent systems running over thousands of concurrent tasks. With native support for hybrid search, multimodal embeddings, and in-cluster inference, Qdrant solves the latency, filtering, and context relevance problems that break most real-world RAG and agent pipelines.

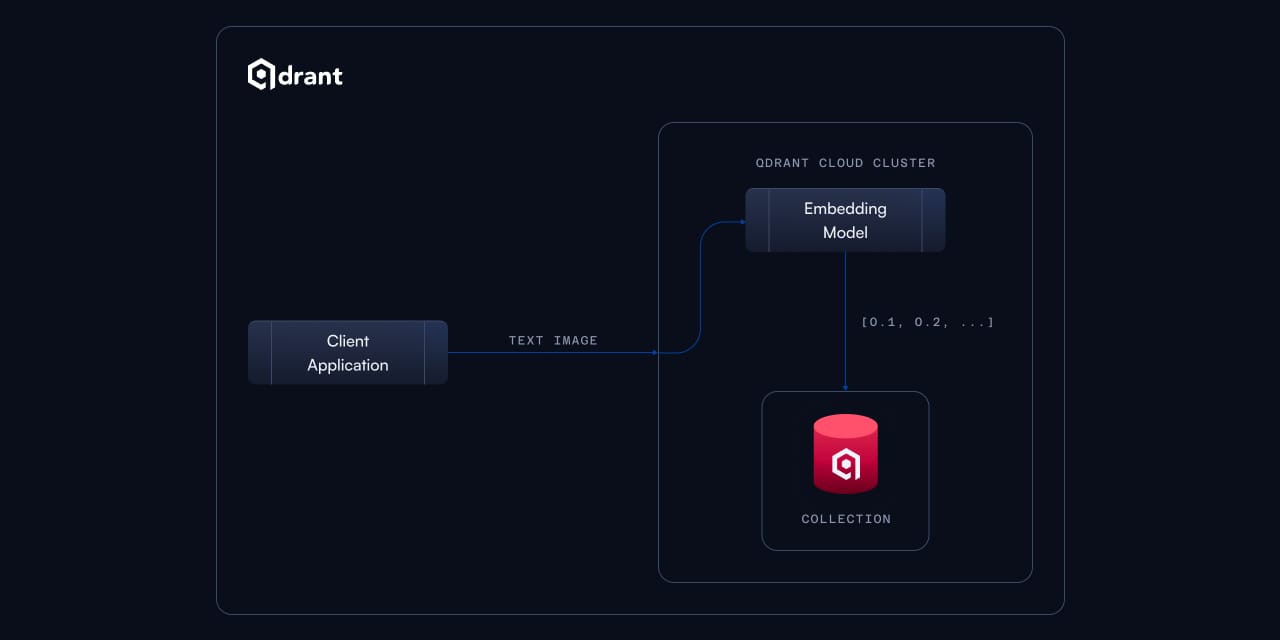

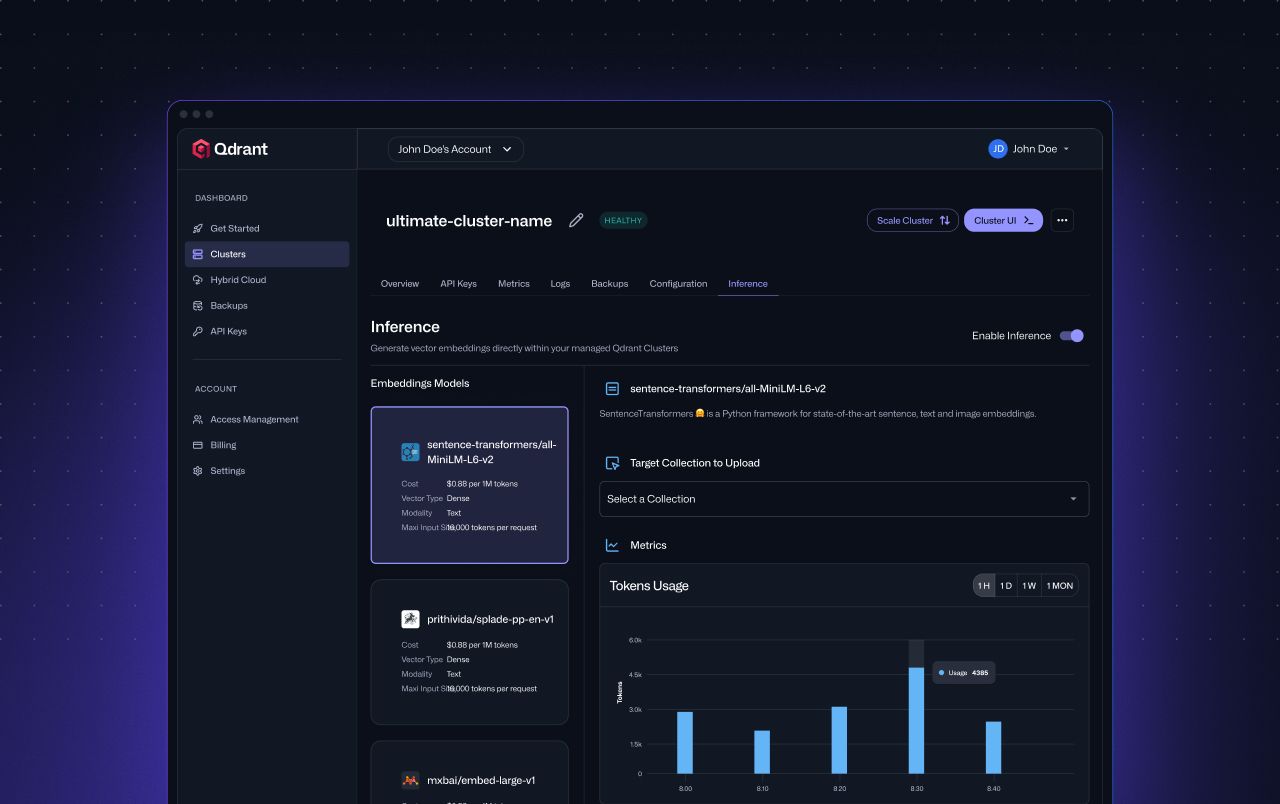

This week, Qdrant launched Qdrant Cloud Inference, which lets users generate, store, and index embeddings in a single API call, turning unstructured text and images into search-ready vectors within one environment. Integrating model inference directly into Qdrant Cloud removes the need for separate inference infrastructure, manual pipelines, and redundant data transfers.

In this conversation, André breaks down how retrieval has become the execution path for agents, why memory systems need to be tightly integrated and multimodal, and what Qdrant is building to push vector-native infrastructure forward in 2025 and beyond.

Let’s dive in ⚡️

Read time: 8 mins

Our Chat with André 💬

André - welcome back to Cerebral Valley! Let’s start high level - how do you define the concept of “memory” in the context of AI agents? What are the most important components that make up a useful memory system?

This is one of the most common questions we’re hearing from teams building agents right now: how should we think about memory? It’s easy to assume a powerful model is enough, but in practice, agents often fail because they forget what they’ve seen, lose context, or hallucinate missing facts. That’s a memory problem.

In this context, memory is the infrastructure that lets an agent persist and retrieve knowledge over time, enabling it to reason across steps, stay grounded, and adapt. A useful memory system must be semantic (to match meaning, not just keywords), low-latency (to keep agents responsive), real-time updatable (so memory can evolve as the agent operates), and context-aware (so retrieval stays relevant to the current task). Without these memory capabilities, agents drift. With them, they stay coherent, grounded, and capable.
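To make those properties concrete, here is a minimal Python sketch of an agent memory. It matches by vector similarity (semantic), makes every write searchable immediately (real-time updatable), and narrows candidates with a payload filter (context-aware). The class `ToyMemory`, the hand-written 3-dimensional vectors, and the `task` payload field are illustrative assumptions standing in for real embeddings. This is a brute-force scan, not Qdrant's API or its HNSW index, so it says nothing about latency at scale.

```python
import math
from dataclasses import dataclass, field


@dataclass
class MemoryItem:
    text: str
    vector: list[float]
    payload: dict = field(default_factory=dict)


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyMemory:
    """Hypothetical brute-force memory store, for illustration only."""

    def __init__(self) -> None:
        self.items: list[MemoryItem] = []

    def add(self, text: str, vector: list[float], **payload) -> None:
        # Real-time update: the item is searchable as soon as it is appended.
        self.items.append(MemoryItem(text, vector, payload))

    def search(self, query: list[float], limit: int = 3, **conditions) -> list[str]:
        # Context-aware: only items whose payload matches every condition are scored.
        candidates = [
            item for item in self.items
            if all(item.payload.get(k) == v for k, v in conditions.items())
        ]
        # Semantic: rank the remaining candidates by vector similarity.
        candidates.sort(key=lambda item: cosine(query, item.vector), reverse=True)
        return [item.text for item in candidates[:limit]]


memory = ToyMemory()
memory.add("User prefers window seats", [0.9, 0.1, 0.0], task="travel")
memory.add("Invoice #12 is overdue", [0.1, 0.9, 0.0], task="billing")
memory.add("User is vegetarian", [0.7, 0.2, 0.3], task="travel")

print(memory.search([1.0, 0.0, 0.0], limit=1, task="travel"))
# prints ['User prefers window seats']
```

The filter runs before ranking here, so the billing entry can never leak into a travel step no matter how similar its vector is.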

There’s been a lot of noise about retrieval being the key to unlocking more capable agents. What’s your view on that? Is retrieval the bottleneck, or is it something else entirely?

Retrieval is very often the bottleneck, and it’s a core part of the memory system, not separate from it. You can store everything an agent needs to know, but if it can’t retrieve the right context at the right time (across steps, formats, or modalities), it fails. That’s where many real-world issues show up: missing context, slow recall, irrelevant results.

Especially for agents operating over long tasks or multimodal data, retrieval needs to be fast, filtered, and tightly scoped to stay useful. It’s also where most latency and architectural complexity arise, particularly when embedding, storage, and filtering are stitched across multiple services. That’s why we’ve made retrieval a first-class focus for Qdrant: real-time, hybrid, and multimodal search, fully integrated into the memory layer.

Qdrant has supported RAG for a while now. What are the unspoken challenges in building real-world retrieval pipelines for agentic use cases, especially ones that need to work in production?

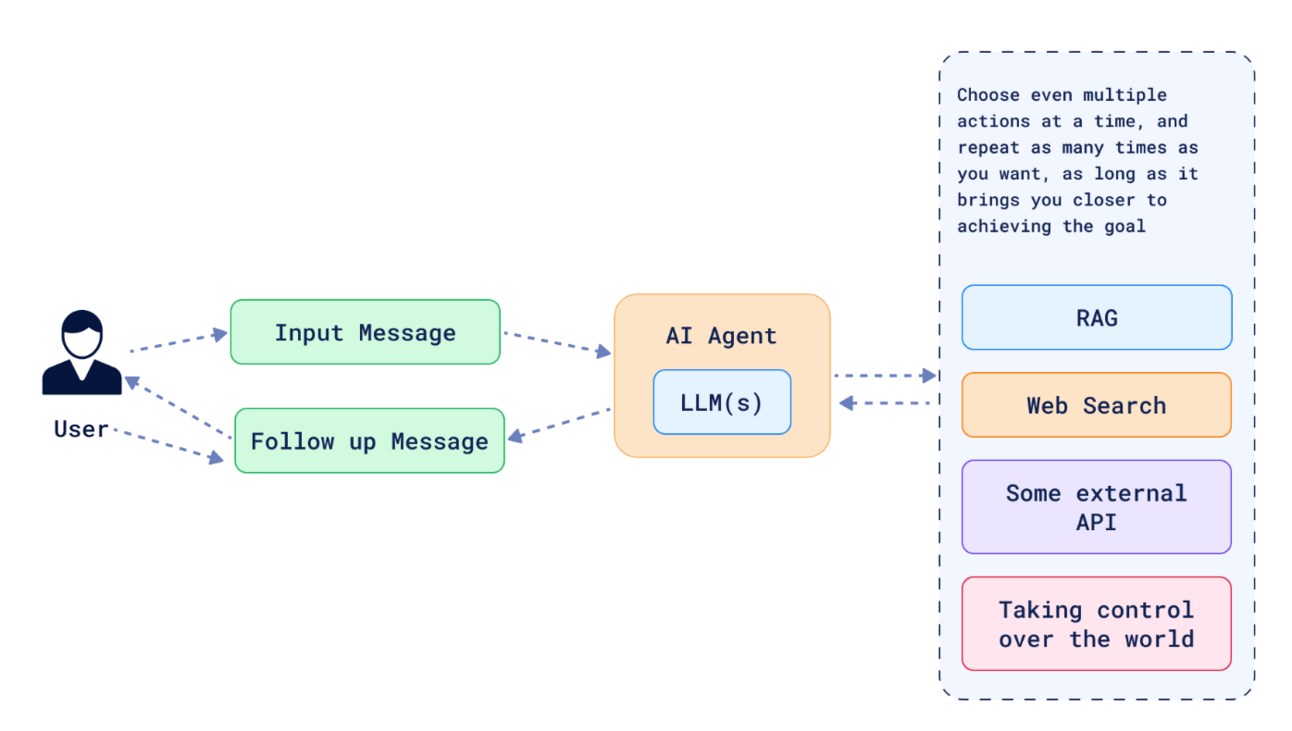

The challenges are mostly invisible until you try to scale. In development, retrieval feels linear: chunk text, embed it, query it. But agentic systems break that pattern. These aren’t static RAG flows - they're non-linear, multi-step processes where retrieval is just one tool among many. Agents decide when to query, what to retrieve, and how to use it. They may generate new memory mid-task, require multimodal input, or reformulate queries on the fly. This dynamic behavior defines what we call Agentic RAG: retrieval embedded in a larger loop of planning, reasoning, and adaptation.
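The Agentic RAG loop described here, where retrieval is one tool the agent calls only when it decides to, can be sketched in a few lines of Python. Everything below is a hypothetical stand-in: `retrieve` uses keyword overlap instead of vector search, `reformulate` uses a fixed synonym table where a real agent would ask its LLM, and the `lookup:` / `remember:` step prefixes are an invented plan format. The sketch only shows the control flow: conditional retrieval, on-the-fly reformulation, and memory written mid-task that later steps can find.

```python
import re


def tokens(text: str) -> set[str]:
    return set(re.findall(r"\w+", text.lower()))


def retrieve(memory: list[str], query: str) -> list[str]:
    """Toy keyword retrieval standing in for a vector search call."""
    q = tokens(query)
    return [entry for entry in memory if q & tokens(entry)]


def reformulate(query: str) -> str:
    """Hypothetical rewrite; a real agent would ask its LLM to rephrase."""
    synonyms = {"lodging": "hotel", "flight": "plane"}
    return " ".join(synonyms.get(word, word) for word in query.lower().split())


def run_agent(steps: list[str], memory: list[str]) -> list[str]:
    log = []
    for step in steps:
        kind, _, arg = step.partition(":")
        arg = arg.strip()
        if kind == "lookup":
            # The agent chose to query memory at this step.
            hits = retrieve(memory, arg)
            if not hits:
                # Nothing relevant came back: reformulate and retry once.
                arg = reformulate(arg)
                hits = retrieve(memory, arg)
            log.append(f"lookup {arg!r} -> {hits}")
        elif kind == "remember":
            # New memory generated mid-task; later steps can retrieve it at once.
            memory.append(arg)
            log.append(f"stored {arg!r}")
        else:
            # Any other step is an action that needs no retrieval.
            log.append(f"act {step!r}")
    return log


memory = ["hotel in paris booked", "user prefers aisle seats"]
for line in run_agent(
    ["lookup: lodging", "remember: itinerary drafted for paris",
     "draft email", "lookup: itinerary"],
    memory,
):
    print(line)
```

The first lookup only succeeds after reformulation, and the final lookup succeeds only because an earlier step wrote to memory, which is exactly the kind of non-linear dependency a static chunk-embed-query pipeline does not have.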

In this setup, latency and accuracy compound. A single slow or imprecise retrieval doesn’t just delay a response; it can derail the entire reasoning path. Agents often run multiple steps in sequence, and even 100 ms per step adds up fast. If the retrieved context is off, the model hallucinates. At scale, that creates bottlenecks in both performance and output quality.
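The compounding effect is easy to quantify under simple assumptions. The sketch below assumes retrieval steps run strictly in sequence at a fixed 100 ms each, and that each step independently retrieves relevant context with probability 0.95. Both numbers are illustrative, not measurements.

```python
def pipeline_cost(steps: int, latency_ms: float, hit_rate: float) -> tuple[float, float]:
    """Return total retrieval latency (ms) and the chance every step got relevant context.

    Assumes steps run strictly in sequence and that each retrieval succeeds
    independently with probability `hit_rate` (a simplification).
    """
    total_latency_ms = steps * latency_ms
    all_steps_grounded = hit_rate ** steps
    return total_latency_ms, all_steps_grounded


for steps in (1, 5, 10, 20):
    latency, grounded = pipeline_cost(steps, latency_ms=100, hit_rate=0.95)
    print(f"{steps:>2} steps: {latency / 1000:.1f} s retrieval, "
          f"{grounded:.0%} chance all steps got relevant context")
```

With these assumed numbers, ten steps already cost a full second of retrieval time, and only about 60% of ten-step runs get relevant context at every step (0.95^10 ≈ 0.599).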

What’s uniquely difficult about powering memory systems for AI agents compared to more traditional use cases like search or recommendations?

Not all vector databases are built the same. General-purpose databases with vector extensions are designed for structured data and transactional workloads - not real-time retrieval. They treat vectors as an add-on, using architectures that weren’t built for high-dimensional, low-latency search. That works for static use cases like basic semantic search or recommendations. But AI agents need something else entirely: fast, filtered retrieval that updates in real time as memory evolves across steps.

Qdrant isn’t a general-purpose database with vector support. It’s a native vector search engine, built from the ground up to make retrieval the core, not an add-on. We support hybrid queries (dense, sparse, filters), filterable HNSW, GPU-accelerated indexing, multivector embeddings, and instant insert + query. These aren’t bolt-ons. They are the architecture. That’s what lets agents stay grounded, adapt quickly, and operate at scale. With Qdrant, our goal is to give developers the most complete and flexible vector search engine available today - purpose-built to support responsive, context-aware agents, and tunable to the specific memory, retrieval, and performance requirements of any AI agent architecture.
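The hybrid queries mentioned above combine dense (semantic) and sparse (term-based) retrieval. One widely used way to merge two ranked result lists is reciprocal rank fusion (RRF), where each document scores the sum of 1 / (k + rank) over the lists it appears in. The sketch assumes both searches, including any payload filters, have already run and returned ranked IDs. The document IDs and the constant k = 60 (a commonly used default) are illustrative, and this is not necessarily how Qdrant fuses results internally.

```python
from collections import defaultdict


def reciprocal_rank_fusion(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Fuse ranked ID lists: each ID scores sum(1 / (k + rank)), with rank starting at 1."""
    scores: dict[str, float] = defaultdict(float)
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] += 1.0 / (k + rank)
    # Highest fused score first; ties broken by ID for a deterministic order.
    return sorted(scores, key=lambda doc_id: (-scores[doc_id], doc_id))


dense_hits = ["doc_a", "doc_b", "doc_c"]   # e.g. semantic matches from a dense model
sparse_hits = ["doc_c", "doc_a", "doc_d"]  # e.g. exact-term matches from a sparse model
print(reciprocal_rank_fusion([dense_hits, sparse_hits]))
# prints ['doc_a', 'doc_c', 'doc_b', 'doc_d']
```

`doc_c`, ranked only third by the dense model, moves up to second because the sparse model also ranks it highly. RRF uses ranks rather than raw scores, so it avoids having to calibrate dense and sparse similarity scales against each other.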

Our vector-native search engine is already powering production AI agent systems at companies like Dust and Lyzr. Dust scaled to over 5,000 dynamic data sources with Qdrant, reducing latency and memory pressure through quantization and advanced sharding. Lyzr saw a 90% drop in query latency and 2x faster indexing, delivering fast, reliable retrieval across hundreds of concurrently running agents. We also power real-time Agentic RAG applications at enterprise scale, including Tripadvisor’s AI Trip Planning, built on billions of reviews and images; HubSpot’s Breeze AI assistant, which delivers real-time, personalized responses with deep contextual awareness; and Deutsche Telekom’s multi-agent platform, which operates across 2M+ AI-driven conversations with shared context.
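Why quantization relieves memory pressure comes down to arithmetic: storing each dimension in fewer bits shrinks the raw vector footprint proportionally. The corpus size (10 million vectors) and the 1536-dimension embeddings below are assumptions chosen for illustration, not figures from Dust or Lyzr. The count covers raw vectors only; real deployments also hold index structures and payloads, and quantized setups often keep full-precision vectors (for example on disk) to rescore top candidates.

```python
def raw_vector_gib(num_vectors: int, dim: int, bits_per_dim: float) -> float:
    """Storage for the vectors alone in GiB (ignores index graph and payload overhead)."""
    return num_vectors * dim * bits_per_dim / 8 / 2**30


N, DIM = 10_000_000, 1536  # assumed corpus size and embedding dimension
for name, bits in [("float32", 32), ("int8 scalar", 8), ("binary (1 bit)", 1)]:
    print(f"{name:>15}: {raw_vector_gib(N, DIM, bits):6.1f} GiB")
```

Under these assumptions the raw vectors take roughly 57 GiB as float32, about 14 GiB with 8-bit scalar quantization, and under 2 GiB with 1-bit binary quantization, which is the difference between needing a large-memory node and fitting in RAM comfortably.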

None of these teams just needed vector support; they needed vector-native search infrastructure purpose-built for real-time, adaptive memory. And that’s exactly what we’ve built.

When you think about where Qdrant fits into the AI agent stack, how would you describe its ideal role? Is it the memory layer, the retrieval backbone, or something else?

The way we see Qdrant in the AI agent stack is as the retrieval backbone and context engine - not just where knowledge is stored, but where it’s shaped into something the model can actually use. Models don’t reason in a vacuum; they operate on the context you give them. That makes retrieval the real execution path for agents.

In this sense, Qdrant plays a central role in what’s increasingly being called context engineering: the practice of dynamically structuring, filtering, and optimizing context so the agent can plan, decide, and act effectively. We don’t just help agents remember; we help them stay situationally aware. From hybrid queries and multimodal data to real-time updates and task-specific retrieval, Qdrant is designed to give developers full control over what the model sees, step by step, with precision.

Let’s shift to Qdrant Cloud Inference. What made you decide now was the right time to launch embedding generation natively inside the database?

Yes, we’re excited about the launch of Qdrant Cloud Inference. It’s designed to solve a specific problem we’ve seen in production: embedding pipelines that rely on external APIs introduce unnecessary latency, network costs, and complexity - especially in systems where new data must be searchable immediately.

With Qdrant Cloud Inference, embeddings are generated directly inside the network of your Qdrant Cloud cluster and indexed in the same step. That means no external API calls, no waiting on batch jobs, and no syncing between services. It’s a single, integrated flow that reduces time-to-search from seconds to milliseconds.

It’s a critical upgrade for real-time AI systems. Agents generate new memory mid-task, and multimodal apps pull context from different embedding models. In all of these cases, the ability to go from raw input to indexed vector instantly isn’t a nice-to-have; it’s quickly becoming table stakes.

And it’s easy to use: no separate tooling, no orchestration required - just a single API call to embed, store, and search. With curated dense, sparse, and image models, Qdrant Cloud Inference gives developers the speed and control they need, without adding complexity.
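The practical difference between an external embedding pipeline and inference inside the cluster is where the embed step sits. The toy Python sketch below models the single-call pattern: the index accepts raw text, embeds it, and indexes it in one `upsert_text` call, and queries go through the same embedding path. The hashing "embedder", the `InlineEmbeddingIndex` class, and its method names are hypothetical stand-ins chosen so the example runs offline; they are not Qdrant Cloud Inference's API or any of its curated models.

```python
import math
import re
import zlib


def toy_embed(text: str, dim: int = 512) -> list[float]:
    """Hypothetical stand-in embedder: hashed bag of words, L2-normalised."""
    vec = [0.0] * dim
    for word in re.findall(r"\w+", text.lower()):
        vec[zlib.crc32(word.encode()) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class InlineEmbeddingIndex:
    """Embeds and indexes in the same call, so there is no separate pipeline to sync."""

    def __init__(self) -> None:
        self.vectors: dict[int, list[float]] = {}
        self.texts: dict[int, str] = {}

    def upsert_text(self, point_id: int, text: str) -> None:
        # Embedding and indexing happen together; the point is searchable on return.
        self.vectors[point_id] = toy_embed(text)
        self.texts[point_id] = text

    def search_text(self, query: str, limit: int = 1) -> list[str]:
        # The query is embedded by the same code path as the stored documents.
        q = toy_embed(query)
        ranked = sorted(
            self.vectors,
            key=lambda pid: sum(a * b for a, b in zip(q, self.vectors[pid])),
            reverse=True,
        )
        return [self.texts[pid] for pid in ranked[:limit]]


index = InlineEmbeddingIndex()
index.upsert_text(1, "Boutique hotel near the Louvre in Paris")
index.upsert_text(2, "Refund policy for cancelled flights")
print(index.search_text("hotel in Paris"))
```

Because documents and queries share one embedding path inside the same component, there is no window in which a document has been stored but not yet embedded, and no risk of the two sides drifting onto different model versions.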

Why was it important to build your own embedding infrastructure directly into Qdrant Cloud?

Early in our journey, we heard a recurring theme from our community: embedding pipelines were slowing teams down. Developers loved Qdrant’s search performance, but connecting it to third-party embedding APIs - or running inference locally - added latency, complexity, and infrastructure overhead. In production RAG and agent systems, those small inefficiencies compounded into real barriers.

That’s what originally inspired us to build FastEmbed, our lightweight, ONNX-based Python library for local inference. It was our first step toward simplifying the embedding workflow, and it resonated quickly. FastEmbed now has over 2,000 GitHub stars and is used by thousands of developers building dense, sparse, multivector, and multimodal pipelines - optimized for speed, flexibility, and ease of use.

But the feedback kept coming: could we take it even further? Could Qdrant handle the full flow - embedding through retrieval - in one system?

That’s exactly what led us to build Qdrant Cloud Inference. It brings embedding generation directly into the network of your Qdrant Cloud cluster - no external APIs, no egress latency, no manual coordination between services. Embedding and indexing now happen in a single step, dramatically improving speed while simplifying developer operations.

This isn’t just about convenience. It’s part of a bigger vision for Qdrant Cloud: to give developers a fully managed, production-grade vector search platform that’s both easy to use and built to scale. With native inference in the loop, Qdrant Cloud becomes a complete system for real-time, semantic, multimodal, and hybrid search.

Between Qdrant Hybrid Cloud, GPU indexing, and now Inference, Qdrant is pushing hard on infrastructure innovation. What’s the north star guiding these decisions? What’s the product vision you’re building toward?

We’ve always been laser-focused on making vector search excellence the foundation of everything we build. Our goal is to offer the most customizable and performant vector search engine available, one that gives developers precise control over indexing, filtering, hybrid retrieval, and latency. That’s why we continue to invest deeply in infrastructure: from GPU-accelerated indexing to native on-cluster inference and flexible Hybrid Cloud deployments. Each of these capabilities is purpose-built to make working with vectors easier, faster, and more production-ready - no generic layers, no bolt-ons. Just one system engineered from the ground up for vector-native performance at scale.

You're now the only vector DB offering native support for both text and image embeddings. Why do you believe multimodality is going to matter more moving forward?

Multimodality matters because 90% of enterprise data is unstructured - and it doesn’t come in just one format. It’s a mix of emails, PDFs, images, videos, logs, charts, scanned documents, and more. To build AI systems that can actually reason across this information, retrieval engines need to move beyond text-only assumptions.

That’s why we built native support for both text (dense and sparse) and image embeddings directly into Qdrant. Whether it’s enriching a support agent with screenshots, powering document search with scanned PDFs, or enabling agents to process visual memory alongside text, our goal is to make it seamless to search across all data types.

Zooming out, do you see the rise of AI agents leading to a new class of vector DB requirements? What do you think will define the next-generation memory layer?

Yes, agents have made retrieval a first-class problem. As I mentioned earlier, it’s about more than just accuracy. It’s about responsiveness, filtering, and the ability to update context on the fly. Those demands break most general-purpose systems.

And with agents moving beyond the cloud into VPCs, hybrid environments, and even physical systems like robotics, the memory layer has to be deployable anywhere without compromising on performance. This is exactly where Qdrant stands out. Our vector-native architecture lets us deeply optimize for search speed, filtering, and recall quality in ways that bolt-on solutions can’t match. It’s a real-time engine built for retrieval, wherever and however your agents operate.

Final one: what’s one thing the Qdrant team is building or exploring for H2 2025 right now that you’re personally really excited about?

We also just released Qdrant v1.15, and it’s a big step forward in our continued focus on deep vector search performance. It includes major upgrades to full-text capabilities, such as phrase search, a multilingual tokenizer, and ingestion speed improvements. We’ve also introduced new binary and asymmetric quantization modes that offer powerful tradeoffs between storage and recall, especially for lower-dimensional vectors.
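Binary quantization keeps one bit per dimension (here, the sign), so stored vectors shrink 32x relative to float32. An asymmetric scheme, as the term is generally used, quantizes the stored vectors but keeps the query at higher precision. The sketch below contrasts the two scoring styles on toy 4-dimensional vectors. The sign-based 1-bit encoding, the ±1 decoding, and the vectors themselves are assumptions for illustration; this is a conceptual example, not Qdrant's actual quantization implementation.

```python
def binarize(vector: list[float]) -> list[int]:
    """1-bit encoding: keep only the sign of each dimension (1 if positive, else 0)."""
    return [1 if x > 0 else 0 for x in vector]


def symmetric_score(query: list[float], code: list[int]) -> int:
    """Both sides binarized: count matching bits (dimension minus Hamming distance)."""
    return sum(q == c for q, c in zip(binarize(query), code))


def asymmetric_score(query: list[float], code: list[int]) -> float:
    """Query stays full precision; each stored bit is decoded as +1 or -1."""
    return sum(q * (1 if c else -1) for q, c in zip(query, code))


# Only the 1-bit codes are stored; the full-precision originals are shown for reference.
stored = {
    "a": binarize([0.8, -0.1, -0.2, -0.6]),
    "b": binarize([-0.3, 0.5, 0.4, -0.2]),
}
query = [0.9, 0.05, 0.04, -0.7]
for name, code in stored.items():
    print(name, code, symmetric_score(query, code), round(asymmetric_score(query, code), 2))
```

With full precision, `a` is clearly the better match (dot product about 1.13 versus about -0.09 for `b`). The symmetric score prefers `b` because the tiny query components 0.05 and 0.04 still flip whole bits, while the asymmetric score sees that those components are small and ranks `a` first.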

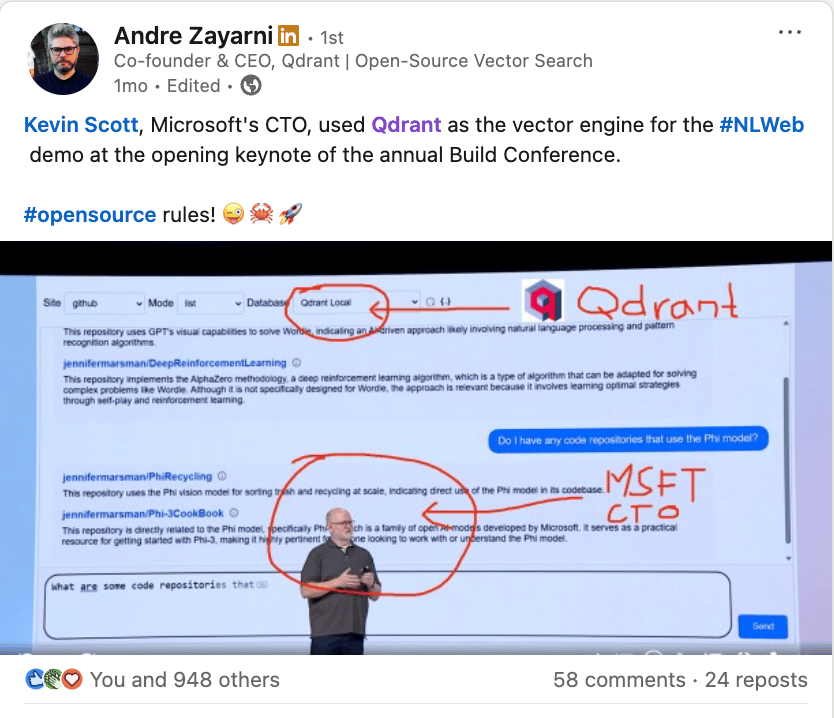

What excites me most is that we’re still just scratching the surface of what vector search can enable. We see a real opportunity not just to improve retrieval, but to reshape how people experience search across the web. Our work with Microsoft on NLWeb is one example of that: giving developers a lightweight way to build natural language interfaces that connect directly to fast, context-aware vector retrieval. Overall, we’re staying laser-focused on vector search: not trying to be everything, but going deeper than anyone else. Every release, every optimization, every integration is designed to push the limits of what real-time, high-precision, scalable retrieval can do. That’s the path we’re on, and we’re just getting started.

Conclusion

Stay up to date on the latest with Qdrant, follow them here.


If you would like us to ‘Deep Dive’ a founder, team or product launch, please reply to this email or DM us on Twitter or LinkedIn.